Now that R2 and Server 2008 have been out for some time, the typical OCS deployment uses Windows Server 2008 as the host OS instead of Server 2003, and a particular issue has begun to pop up in some deployments. If an R2 Edge Server is deployed on Server 2008 and three separate NICs are used for the three external Edge roles, routing problems typically appear. This was not a problem on Server 2003, but the networking behavior of Server 2008 has changed.

Well, here is the story. (Do not miss the final paragraph, as it makes an important point that might make the rest of this article moot!)

OCS Edge on Windows Server 2003

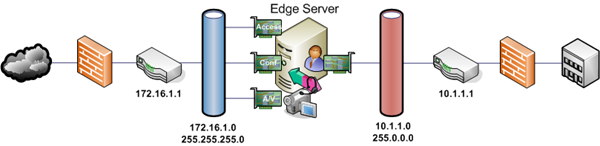

Let us first revisit a proper configuration of an Edge Server when using multiple external interfaces.

Although this example uses private IP addresses with a single consolidated R2 Edge (behind a firewall that supports static NAT), the scenario still holds true if publicly routable IP addresses are used instead.

| Interface Role | IP Address | Subnet Mask | Default Gateway |
|---|---|---|---|
| Internal Edge | 10.1.1.30 | 255.0.0.0 | <not defined> |
| Access Edge | 172.16.1.31 | 255.255.255.0 | 172.16.1.1 |
| Web Conferencing Edge | 172.16.1.32 | 255.255.255.0 | <not defined> |
| A/V Conferencing Edge | 172.16.1.33 | 255.255.255.0 | <not defined> |

Because internal networks are ‘known’ and external clients could be connecting from anywhere in the public Internet address space, the server’s single default gateway should NOT be defined on the internal interface, but on an external interface. This holds whether the external interfaces use public IPs or, as in this example, private IPs behind static NAT.

Additionally, a static route is defined to route traffic to and from the internal subnet(s). Not only do the Front-End and other OCS servers need to communicate directly with the Edge internal interface, so do any internal client workstations. For example, A/V sessions between internal and external clients travel from the internal workstation to the Edge internal interface, and out the external interface to the external client. This is why it’s important to make sure that the Edge internal FQDN is published in the internal DNS zone and not just set up on the Front-End server as a HOSTS file entry.

Since the static route needs to cover more than just the Front-End server, it should define the entire routable internal network. If multiple subnets are used internally, then either a broader (shorter) mask is needed (if all networks fall within the same IP numbering scheme) or multiple static routes will need to be added to include any and all networks where OCS servers, clients, and devices may reside.

route add -p 10.0.0.0 mask 255.0.0.0 10.1.1.1
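To sanity-check that a static route really covers every internal network where OCS servers, clients, and devices may live, the destination/mask pair can be compared against a list of internal subnets. The Python sketch below is illustrative only: the function names and the example subnets (including 192.168.50.0/24) are hypothetical. It also encodes the assumption that a route destination must be the network address for its mask, which is why 10.0.0.0 (not 10.1.1.0) pairs with 255.0.0.0.

```python
import ipaddress


def route_prefix(destination: str, mask: str) -> ipaddress.IPv4Network:
    """Return the prefix described by `route add <destination> mask <mask>`.

    strict=True raises ValueError when the destination has host bits set
    outside the mask (e.g. 10.1.1.0 with 255.0.0.0), so the destination
    must be the network address for that mask.
    """
    return ipaddress.IPv4Network(f"{destination}/{mask}", strict=True)


def uncovered_subnets(routes, internal_subnets):
    """List internal subnets not contained in any (destination, mask) route."""
    prefixes = [route_prefix(dest, mask) for dest, mask in routes]
    missing = []
    for subnet in internal_subnets:
        net = ipaddress.IPv4Network(subnet)
        if not any(net.subnet_of(prefix) for prefix in prefixes):
            missing.append(str(net))
    return missing


# Hypothetical internal networks where OCS servers and clients might live.
internal = ["10.1.1.0/24", "10.20.0.0/16", "192.168.50.0/24"]
print(uncovered_subnets([("10.0.0.0", "255.0.0.0")], internal))
# ['192.168.50.0/24'] -> a second static route would be needed
```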

Also, do not forget to open the TCP/IP Advanced Properties of every external interface and disable the Register this connection’s addresses in DNS option.

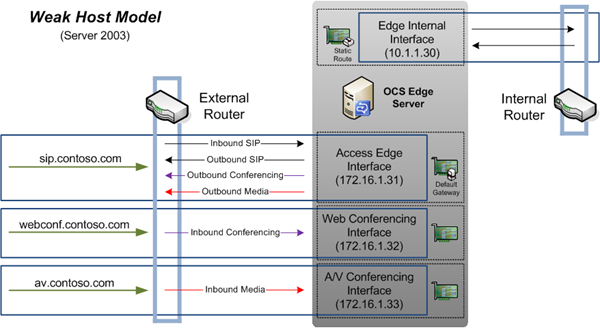

The above configuration works on Server 2003 because that operating system follows what is called the Weak Host Model. Under this model, all outbound traffic leaves the server on a loosely defined ‘primary’ external interface. In our scenario the Access Edge interface holds this title because it has the default gateway defined on it, while the other externally facing interfaces have no default route defined. This means that inbound traffic from external clients that hits the Edge Server on any of the different interfaces completes its return trip out of the single primary interface, as shown in the diagram at the start of this section.

OCS Edge on Windows Server 2008

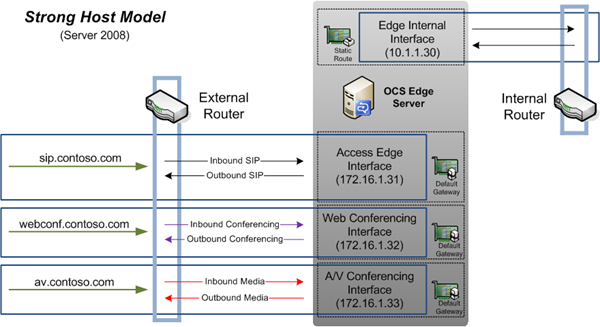

Windows Server 2008 by default now uses the Strong Host Model which no longer contains the premise of a ‘primary’ interface role among the multiple NICs. (For an excellent article on how all of this works, take a look at The Cable Guy’s article Strong and Weak Host Models.)

This means that traffic which enters a specific interface will always be sent back out of that same interface, as shown in the diagram above.

The initial benefit is clear: traffic is now segregated and bandwidth is used more efficiently than it was under the Weak Host Model. This behavior also makes it easier to design routing and firewall policies, as return traffic can be predicted correctly and like-communication rules can be bound together in the same firewall policies.

But the configuration that worked on Server 2003 does not work on Server 2008. External Office Communicator clients will be able to sign in and federation will function, but external Live Meeting won’t connect and no A/V or desktop sharing features will work. (For now, ignore the Default Gateway icons on the Webconf and AV Edge interfaces in the diagram above. Without them, the diagram represents the typical configuration based on the previous rules; those icons come into play with the potential resolution covered in the next section.)

This is because Web Conferencing and A/V return traffic leaves the server on the same interface it originally came in on, and since the Default Gateway is blank on those two interfaces, that traffic cannot reach the router and simply fails. Access Edge traffic is unaffected because that interface does have a Default Gateway. The behavior is easy to confirm by watching traffic on the external firewall and by capturing packets on the server: the return traffic is lost because no usable outbound route exists for the outgoing interface.
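The difference between the two models can be reduced to a toy route-selection function. This Python sketch is a deliberate simplification, not how the Windows TCP/IP stack is implemented: it assumes the external client is off-subnet (so only default routes matter), and the `Nic` class, the `reply_path` function, and the metric value are hypothetical. With the Server 2003-style configuration, a reply to traffic that arrived on the AV Edge NIC finds a next hop under the weak host model but none under the strong host model.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class Nic:
    name: str
    ip: str
    gateway: Optional[str]  # None models "Default Gateway <not defined>"
    metric: int = 276       # hypothetical metric for this NIC's default route


def reply_path(nics, arrival, strong_host):
    """Pick (egress NIC, next hop) for a reply to an off-subnet external client.

    Only default routes are considered, since the client is somewhere on the
    Internet. Strong host: only the NIC the request arrived on may send the
    reply. Weak host: any NIC's default route may be used and the lowest
    metric wins. Returns None when no usable route exists.
    """
    candidates = [n for n in nics if n.gateway is not None]
    if strong_host:
        candidates = [n for n in candidates if n.name == arrival]
    if not candidates:
        return None  # reply is dropped: no next hop on an eligible interface
    best = min(candidates, key=lambda n: n.metric)
    return best.name, best.gateway


# External NICs from the Server 2003-style table (internal NIC omitted).
edge = [
    Nic("Access Edge", "172.16.1.31", "172.16.1.1"),
    Nic("Webconf Edge", "172.16.1.32", None),
    Nic("AV Edge", "172.16.1.33", None),
]
print(reply_path(edge, "AV Edge", strong_host=False))  # ('Access Edge', '172.16.1.1')
print(reply_path(edge, "AV Edge", strong_host=True))   # None
```

Giving the Webconf and AV Edge entries a gateway in this model makes the strong host case return a path on the arrival NIC, which is what both resolutions below aim for in different ways.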

So there are actually two ways to resolve this in Server 2008. I’ve struggled to get a ‘best practice’ recommendation from anyone on this problem, and Microsoft has yet to update any TechNet articles to address it. The main reason is that there really is no single solution. In my opinion, and based on feedback from other MVPs and SMEs in the product area, it really depends on the network configuration and how the Edge Server should handle and route traffic.

Use Multiple Default Routes

One approach is to simply set the same Default Gateway on each of the external interfaces. Normally you wouldn’t want multiple default routes defined on the same server. When multi-homing a Windows Server to different IP subnetworks (as we have here with the separate internal and external NICs on the Edge Server), factors like Dead Gateway Detection come into play, and following that general practice is correct.

But when multiple interfaces are connected to the same IP subnetwork and are used to segregate traffic rather than simply load balance it, each adapter needs a valid next-hop route defined for the Strong Host Model to work.

| Interface Role | IP Address | Subnet Mask | Default Gateway |
|---|---|---|---|
| Internal Edge | 10.1.1.30 | 255.0.0.0 | <not defined> |
| Access Edge | 172.16.1.31 | 255.255.255.0 | 172.16.1.1 |
| Web Conferencing Edge | 172.16.1.32 | 255.255.255.0 | 172.16.1.1 |
| A/V Conferencing Edge | 172.16.1.33 | 255.255.255.0 | 172.16.1.1 |

The potential pitfall with this solution is that any of the three interfaces could be used to initiate outbound communications. Checking the route table afterwards will show that all three default routes have the same metric value. For responses to inbound traffic this is not a problem: the Strong Host Model dictates that each response leaves the interface it entered on, so external firewalls see the return traffic from the same IP and routing works. But an outbound connection initiated by the Edge, as happens when an internal user starts an IM conversation with a federated or PIC (Public IM Connectivity) user, needs to leave through the Access Edge interface to match the traffic profile the firewalls are configured for (TCP 5061 outbound). This usually works because the Access Edge was the first external interface configured, but because the Strong Host Model does not officially designate a primary interface, it comes down to a little luck or black magic.

One way to force the Access Edge interface to act as the primary default route interface is to leave the Default Gateway set in its interface properties and run route print to identify the metric of that route. Then, instead of adding the same Default Gateway value on the other two interfaces, create a static default route for each of them from the command line with a higher metric. This ensures that the Access Edge interface is used for new outbound connections, while Strong Host still finds a defined route on whichever of the three interfaces it needs to reply from.
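How the metrics break the tie for new outbound connections can be sketched in a few lines of Python. This is a simplified model under stated assumptions: it only compares default routes by metric, it ignores any other tie-breaking Windows may apply, and the function name and metric values are hypothetical. Replies are unaffected, because under the strong host model they stay on the interface the request arrived on.

```python
def outbound_interfaces(default_routes):
    """Return the interface(s) whose default route has the lowest metric.

    default_routes is a list of (interface_name, metric) pairs for 0.0.0.0/0
    entries. More than one result means metric alone does not decide which
    NIC a *new* outbound connection (e.g. TCP 5061 to a federated partner)
    will use.
    """
    if not default_routes:
        return []
    lowest = min(metric for _, metric in default_routes)
    return [name for name, metric in default_routes if metric == lowest]


# Hypothetical effective metrics as route print might list them.
same_gateway_everywhere = [("Access Edge", 276), ("Webconf Edge", 276), ("AV Edge", 276)]
tuned_metrics = [("Access Edge", 276), ("Webconf Edge", 277), ("AV Edge", 278)]
print(outbound_interfaces(same_gateway_everywhere))  # all three tie
print(outbound_interfaces(tuned_metrics))            # ['Access Edge']
```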

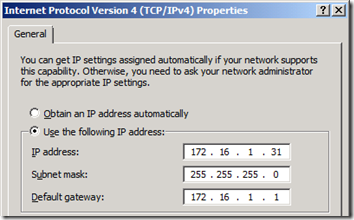

Configuring Multiple Default Gateways

Start by verifying that the Access Edge interface is the only external interface configured with a Default Gateway.

Issue a ROUTE PRINT command to identify the assigned metric for the Access Edge interface’s default route entry, as well as the index of each external NIC. In the example below, the index values are 14, 15, and 16 for the external NICs, and the default route’s metric is 276.

C:\>route print

===========================================================================

Interface List

16...00 15 5d 67 a2 0f ......Microsoft VM Adapter #4 (AV Edge)

15...00 15 5d 67 a2 0e ......Microsoft VM Adapter #3 (Webconf Edge)

14...00 15 5d 67 a2 0d ......Microsoft VM Adapter #2 (Access Edge)

12...00 15 5d 67 a2 0b ......Microsoft VM Adapter (Internal Edge)

1...........................Software Loopback Interface 1

===========================================================================

IPv4 Route Table

===========================================================================

Active Routes:

Network Destination Netmask Gateway Interface Metric

0.0.0.0 0.0.0.0 172.16.1.1 172.16.1.31 276

127.0.0.0 255.0.0.0 On-link 127.0.0.1 306

127.0.0.1 255.255.255.255 On-link 127.0.0.1 306

127.255.255.255 255.255.255.255 On-link 127.0.0.1 306

10.0.0.0 255.0.0.0 10.1.1.1 10.1.1.30 11

Alternatively the NETSH command can be used to identify the interface indexes.

C:\>netsh interface ipv4 show interface

Idx Met MTU State Name

---  ----------  ----------  ------------  ---------------------------

1 50 4294967295 connected Loopback Pseudo-Interface 1

12 5 1500 connected Internal Edge

14 5 1500 connected Access Edge

15 5 1500 connected Webconf Edge

16 5 1500 connected AV Edge
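If the interface indexes need to be picked out programmatically (for example, to feed a script), the netsh table can be parsed by splitting each row into its first four columns plus a free-form name. A Python sketch, assuming the English-language Idx/Met/MTU/State/Name layout shown above; the function name is hypothetical and the sample text mirrors this example’s output.

```python
def parse_netsh_interfaces(output):
    """Map interface name -> index from `netsh interface ipv4 show interface`.

    Assumes the English Idx/Met/MTU/State/Name layout; names may contain
    spaces, so each row is split into four columns plus the remainder.
    """
    indexes = {}
    for line in output.splitlines():
        fields = line.split(maxsplit=4)
        # Data rows start with a numeric index; the header and dash rows do not.
        if len(fields) == 5 and fields[0].isdigit():
            indexes[fields[4].strip()] = int(fields[0])
    return indexes


sample = """
Idx     Met         MTU          State                Name
---  ----------  ----------  ------------  ---------------------------
  1          50  4294967295  connected     Loopback Pseudo-Interface 1
 12           5        1500  connected     Internal Edge
 14           5        1500  connected     Access Edge
 15           5        1500  connected     Webconf Edge
 16           5        1500  connected     AV Edge
"""
indexes = parse_netsh_interfaces(sample)
print(indexes["Access Edge"], indexes["Webconf Edge"], indexes["AV Edge"])  # 14 15 16
```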

Issue the following persistent ROUTE commands to set higher metric values for the other interfaces.

route add -p 0.0.0.0 mask 0.0.0.0 172.16.1.1 metric 277 IF 15

route add -p 0.0.0.0 mask 0.0.0.0 172.16.1.1 metric 278 IF 16
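The pattern behind these two commands (same gateway, one default route per secondary interface, each metric one higher than the last) can be generated rather than typed by hand. A small Python sketch; the function name is hypothetical, and the values are the ones from this example, so substitute your own gateway, starting metric, and interface indexes.

```python
def secondary_default_routes(gateway, primary_metric, secondary_indexes):
    """Build persistent `route add` commands giving each secondary external
    interface its own 0.0.0.0/0 route, with metrics counting up from one
    above the primary (Access Edge) default route's metric."""
    commands = []
    for offset, if_index in enumerate(secondary_indexes, start=1):
        metric = primary_metric + offset
        commands.append(
            f"route add -p 0.0.0.0 mask 0.0.0.0 {gateway} metric {metric} IF {if_index}"
        )
    return commands


for command in secondary_default_routes("172.16.1.1", 276, [15, 16]):
    print(command)
```

The sketch only prints the commands, so they can be reviewed before being run from an elevated command prompt on the Edge Server.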

Disable the Strong Host Behavior

An alternative approach is to disable that default behavior and configure Server 2008 to use the Weak Host Model. This allows the same single external Default Gateway definition, but it has some inherent drawbacks. Especially now with R2, a consolidated Edge Server can more easily host all external roles on the same physical interface (since NAT restrictions have been loosened). Given that, the main benefits of using a separate physical interface for each external role are increased bandwidth and segregated traffic, and reverting the server to the Weak Host Model could negate those benefits. On the flip side, on an Edge Server that handles a lot of A/V communications (which require more bandwidth than other traffic), the Weak Host Model would effectively give inbound A/V a dedicated NIC while outbound A/V travels over the ‘primary’ Access Edge interface, spreading A/V traffic across two interfaces. Keep in mind that the actual benefit may vary depending on the duplex mode of the interfaces and switches, since the two streams flow in opposite directions.

Enabling Weak Host Send/Receive

Use the ROUTE PRINT or NETSH commands shown in the section above to identify each interface’s index value.

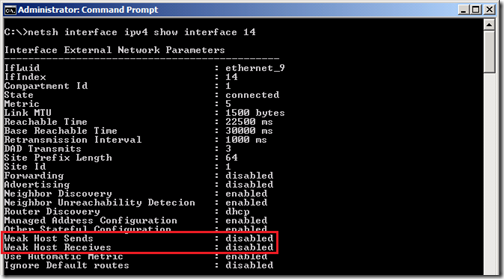

Verify each adapter’s current Weak Host settings

C:\>netsh interface ipv4 show interface 14

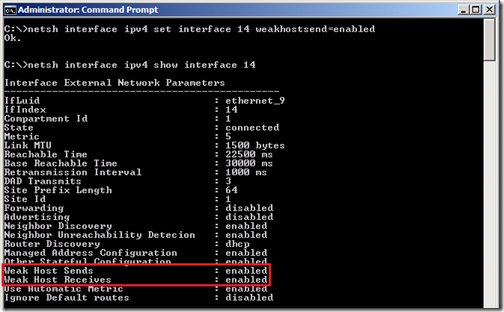

Issue a set of commands for each interface to enable Weak Host sending and receiving.

netsh interface ipv4 set interface 14 weakhostsend=enabled

netsh interface ipv4 set interface 14 weakhostreceive=enabled

netsh interface ipv4 set interface 15 weakhostsend=enabled

netsh interface ipv4 set interface 15 weakhostreceive=enabled

netsh interface ipv4 set interface 16 weakhostsend=enabled

netsh interface ipv4 set interface 16 weakhostreceive=enabled
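Because each interface needs the same pair of commands, generating them from the list of indexes avoids typos such as two commands running together on one line. A minimal Python sketch; the function name is hypothetical, and the indexes 14, 15, and 16 are the external NICs from this example.

```python
def weak_host_commands(interface_indexes):
    """Return the netsh commands that enable weak host send and receive on
    each listed interface, two commands per interface."""
    commands = []
    for index in interface_indexes:
        for setting in ("weakhostsend", "weakhostreceive"):
            commands.append(
                f"netsh interface ipv4 set interface {index} {setting}=enabled"
            )
    return commands


for command in weak_host_commands([14, 15, 16]):
    print(command)
```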

Verify the setting changes on each adapter with the NETSH command again.

C:\>netsh interface ipv4 show interface 14
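To check the result without reading each screen by eye, the `label : value` lines of `netsh interface ipv4 show interface <index>` can be parsed. This Python sketch assumes the English-language output labels the settings Weak Host Sends and Weak Host Receives with values enabled or disabled; the function names and the abbreviated sample text are hypothetical, so adjust the labels if your build or locale prints them differently.

```python
def parse_netsh_details(output):
    """Turn 'Label : value' lines into a dict; lines without a colon are skipped."""
    settings = {}
    for line in output.splitlines():
        label, sep, value = line.partition(":")
        if sep:
            settings[label.strip()] = value.strip()
    return settings


def weak_host_enabled(output):
    """True only if both weak host send and receive are reported as enabled."""
    settings = parse_netsh_details(output)
    return (settings.get("Weak Host Sends") == "enabled"
            and settings.get("Weak Host Receives") == "enabled")


# Abbreviated, hypothetical excerpt of the per-interface output.
sample = """
Interface Access Edge Parameters
Weak Host Sends                    : enabled
Weak Host Receives                 : disabled
"""
print(weak_host_enabled(sample))  # False: receive is still disabled
```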

Summary

Although it’s a bit more complicated, try the first option first, as clients typically configure their firewall rules to flow traffic in and out on the same interfaces. In some instances I’ve seen clients simply set the default gateway in each interface’s properties without mucking around with metrics and command-line strings, and everything just worked fine. Go figure!

But if internal routing issues prevent that approach from working, disabling Strong Host will also get the job done and is an easier configuration on the server side. Changes may need to be made on the firewall to handle inbound and outbound traffic of the same type to and from different IPs, but being armed with this information ahead of time can make that an easier process.

So all this stuff leads me to one important point that can save a ton of work and headaches up front. Simply ask the question: “Why are there 3 externally-facing physical interfaces in the Edge server?”

With NAT support for consolidated Edge in R2, there are now even fewer deployments with separate physical external interfaces. (The main exception is when the Access Edge/Live Meeting and A/V roles connect to different subnetworks, but even that scenario is slightly different, because those unique networks don’t share the same default route anyway.) Physical traffic segregation and bandwidth are the only real arguments for having multiple external interfaces, and with gigabit interfaces being the norm in servers these days, bandwidth limitations may be more perceived than actual. Besides, once that level of traffic is reached, it is typically a better approach to deploy a second Edge Server and expand into a pool than to add more interfaces to a box that, after all, still has to flow all of that data back through a single NIC to reach the internal OCS servers. So if any single recommendation comes out of this article, it is to make sure that three external interfaces are really needed in the first place!