This article is a continuation of a series of articles dedicated to the H.264 Scalable Video Coding (SVC) codec implementation used by Lync 2013. The previous article introduced the standards-based codec and the capabilities defined in its specifications. Additional articles, like this one, take a closer look at how the codec actually operates as implemented in Lync 2013.

Temporal Layers

The concept of temporal scaling in H.264 SVC was introduced at a high level in this article. Microsoft consultants Danny Cheung and Mariusz Ostrowski also briefly cover this topic in their video deep dive session at the recent Lync Conference, which can be seen at about 35 minutes into this recording.

The benefit SVC provides here is that a single encoded video stream can satisfy requests for multiple frame rates. While the H.264 SVC specification allows for many additive layers, the actual implementation of the codec in Lync 2013 supports a maximum of two temporal layers per video stream.

The Base Layer, identified by a Temporal ID (TID) of ‘0’, must always be sent to the decoder, and on its own it provides video at the frame rate encoded for that layer. The base layer contains all the data needed to reconstruct the video, but only at that single frame rate. This layer includes the initial Intra frames (I), which are key frames containing all of the data needed for a single complete frame of video. The subsequent Predictive frames (P) include only the data which has changed relative to previously sent frames, essentially the deltas of the video data. After a set number of P frames are sent, a new I frame is encoded to refresh the complete frame image.
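The I/P pattern described above can be sketched as a simple sequence generator. This is a minimal illustration, assuming a fixed refresh interval; the interval Lync 2013 actually uses is not stated here, so `gop_length` (and the `frame_types` helper itself) is hypothetical.

```python
def frame_types(count: int, gop_length: int) -> list[str]:
    """Return 'I' or 'P' for each of `count` frames.

    Hypothetical helper: assumes one I frame followed by (gop_length - 1)
    P frames, after which a new I frame refreshes the full image.
    """
    if gop_length < 1:
        raise ValueError("gop_length must be at least 1")
    # Every gop_length-th frame is a key frame; the rest carry only deltas.
    return ["I" if i % gop_length == 0 else "P" for i in range(count)]


print("".join(frame_types(count=10, gop_length=4)))  # IPPPIPPPIP
```

A decoder that misses an I frame cannot correctly rebuild the P frames that follow it until the next I frame arrives, which is why the periodic refresh matters.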

To satisfy an additional frame rate request, a single Enhancement Layer can be added to the same stream; it carries additional ‘P’ frames, effectively doubling the frame rate. The enhancement layer is useless on its own because it is composed only of the interim predictive frames, so it must be sent alongside the base layer to be of any value to the decoder.

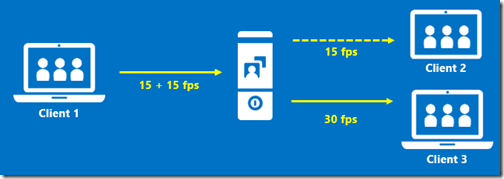

If a Lync 2013 client participating in a multiparty conference receives requests from multiple participants for the same resolution at two different frame rates, the encoding client can satisfy both requests with a single video stream. It does this by encoding a specific frame rate in the base layer and then using the same frame rate value in the enhancement layer. The AVMCU then forwards only the base layer to clients requesting the lower value, while clients requesting the higher value are sent both the base and enhancement layers.

In the basic scenario where an encoder is attempting to satisfy requests for both 15 fps and 30 fps, it will encode video at 15 fps in the base layer and then encode an additional 15 fps in the enhancement layer. For decoders requesting 15 fps the AVMCU forwards only the base layer, while clients asking for 30 fps are sent both layers. Those clients receive two temporal layers of 15 fps each, which combine into a 30 fps stream (15 + 15).
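The forwarding decision described above can be sketched as a small function. This is an illustrative model, not the AVMCU's actual logic: `layers_to_forward` is a hypothetical name, and it assumes one stream whose enhancement layer adds the same frame rate as the base layer, with whole layers either forwarded or stripped.

```python
def layers_to_forward(requested_fps: float, base_fps: float) -> list[int]:
    """Return the temporal layer IDs (TIDs) to forward for one receiver.

    Hypothetical helper: assumes TID 0 at base_fps and a TID 1 enhancement
    layer that adds the same number of frames again. Whole layers are
    forwarded or stripped; individual frames are never dropped.
    """
    full_fps = 2 * base_fps  # base + enhancement, e.g. 15 + 15 = 30
    if requested_fps >= full_fps:
        return [0, 1]  # forward both layers
    if requested_fps >= base_fps:
        return [0]  # strip the enhancement layer
    return []  # this stream cannot serve a rate below its base layer


for fps in (30, 15, 7.5):
    print(fps, layers_to_forward(fps, base_fps=15))
# 30 [0, 1]
# 15 [0]
# 7.5 []
```

The empty result for 7.5 fps reflects the point made later in this article: a 15 + 15 stream offers only two choices, 15 fps or 30 fps.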

These individual layers do not carry the same data; each contains its own unique frames. Think of it as each frame captured from the camera being placed into alternating layers: frame A goes into layer 0, frame B into layer 1, frame C back into base layer 0, frame D into enhancement layer 1, and so on. Decoding layer 0 alone yields frames A, C, E, while decoding layers 0 and 1 together yields every encoded frame: A, B, C, D, E, F, and so on.
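The alternating assignment can be modeled in a few lines. This is a toy sketch: `assign_layers` and `decode` are hypothetical names, frames are represented by letters, and each frame carries its capture index so the receiver can put frames from both layers back in order.

```python
def assign_layers(frames: list[str]) -> dict[int, list[tuple[int, str]]]:
    """Place captured frames alternately into layer 0 (base) and 1 (enhancement)."""
    layers: dict[int, list[tuple[int, str]]] = {0: [], 1: []}
    for index, frame in enumerate(frames):
        layers[index % 2].append((index, frame))  # even -> TID 0, odd -> TID 1
    return layers


def decode(layers: dict[int, list[tuple[int, str]]], tids: list[int]) -> list[str]:
    """Merge the received layers back into capture order."""
    received = [item for tid in tids for item in layers[tid]]
    return [frame for _, frame in sorted(received)]


layers = assign_layers(list("ABCDEF"))
print(decode(layers, [0]))     # ['A', 'C', 'E']
print(decode(layers, [0, 1]))  # ['A', 'B', 'C', 'D', 'E', 'F']
```

Note that `decode(layers, [1])` would return only B, D, F, but in a real stream those predictive frames cannot be reconstructed without the base layer they depend on.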

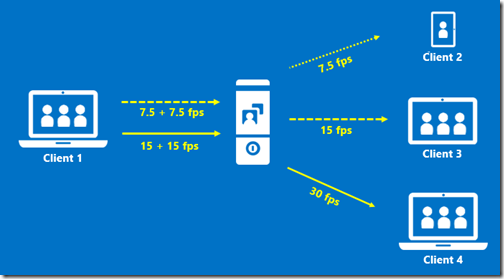

H.264 SVC in Lync 2013 also supports a third frame rate of 7.5 fps, and one might think that this lower request could be extracted from the stream above, but this is not the case. Even though 7.5 fps is exactly half of the 15 fps base layer in the example above, the data is not packaged in a way that allows individual frames to simply be dropped. Earlier articles may have over-simplified this concept, giving the impression that the AVMCU can drop every other frame to cut a 30 fps stream down to 15 fps, for example, and then halve it again down to 7.5 fps. That was not the intention, and this article should help clarify that the flexibility of the codec lies entirely within the individually packaged layers. The AVMCU can only work with entire layers, choosing either to forward or to strip the single enhancement layer.

So on the rare occasions when a client asks for 7.5 fps, the encoding client must send an additional, complete video stream with its own pair of temporal layers encoded at a lower frame rate. This effectively doubles the work the encoding client must do, as it is now encoding the video twice, but the impact on bandwidth is not actually doubled because the second, lower frame rate stream contains less data than the higher frame rate stream.
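How requests might be grouped into streams can be sketched as a greedy assignment. Everything here is an assumption: how Lync 2013 actually decides which streams to encode is not documented, the `plan_streams` helper is hypothetical, and the menu of stream configurations (15 + 15 and 7.5 + 7.5) is taken from the examples in this article and the diagram that follows it.

```python
# Hypothetical menu: (base-layer fps, base + enhancement fps) for each
# two-layer stream configuration, based on this article's examples.
STREAM_CONFIGS = [(15.0, 30.0), (7.5, 15.0)]


def plan_streams(requested: set[float]) -> list[tuple[float, float]]:
    """Greedily choose streams until every requested frame rate is served."""
    remaining = set(requested)
    streams = []
    while remaining:
        top = max(remaining)
        # Prefer a stream where the highest unserved rate uses both layers...
        config = next((c for c in STREAM_CONFIGS if c[1] == top), None)
        if config is None:
            # ...otherwise one where it is served by the base layer alone.
            config = next((c for c in STREAM_CONFIGS if c[0] == top), None)
        if config is None:
            raise ValueError(f"no stream configuration serves {top} fps")
        streams.append(config)
        remaining -= set(config)  # both layer choices are now available
    return streams


print(plan_streams({30, 15, 7.5}))  # [(15.0, 30.0), (7.5, 15.0)]
print(plan_streams({30, 15}))       # [(15.0, 30.0)]
```

Requests for 15 and 30 fps fit in one stream, while adding a single 7.5 fps request forces a second, separately encoded stream, which is the extra encoding work described above.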

As Lync Server matures in future versions and device processing power increases, it is quite possible that this same ability to provide multiple frame rates could be extended to providing multiple resolutions within a single SVC stream. This is called Spatial Scaling and is defined in the codec’s design specifications, which shows how SVC has plenty of room for growth and is only partially utilized in its current implementation.

![image 3](http://blog.schertz.name/wp-content/uploads/2014/03/image_thumb24_thumb.png)

Hi,

Firstly, thanks for the pieces; they're always fun to read.

You mention that the client encodes a 15 fps base layer plus a 15 fps enhancement layer in the same stream for any client that wants it. However, if any viewer wants 7.5 fps, the client has to encode a separate 7.5 fps stream; so far so good. In the last diagram you show the two streams but list 7.5 fps twice.

i.e. 7.5 fps + 7.5 fps: what is the second 7.5 fps used for?

Ben

I wondered how quickly someone might catch that 🙂 Actually I’m not 100% sure on that one, as I’ve been unable to get a definitive answer from Microsoft. From my research I believe there are two possible explanations.

(1) The enhancement layer is always present in the video stream. Requests for 7.5 fps, while possible in Lync, are very rare: all clients support at least 15 fps, so a 7.5 fps request would most likely be caused by very poor network connectivity or some other heavy restriction. In this case I believe the 7.5 + 7.5 base + enhancement stream is the minimum a 2013 client can send, and it provides video for both 7.5 fps and 15 fps requests from any other clients in the call.

(2) Alternatively, a single 7.5 fps base layer may be sent when only one other participant is in the meeting and that participant is requesting only 7.5 fps; when additional clients join, the enhancement layer is added to the stream to fulfill 15 fps requests.

For additional 30 fps requests, another new stream would be invoked as explained in this article.

Hi!

Thank you for your great work!

Could you clarify the following? We can have various aspect ratios, resolutions and frame rates. In your article you write that Lync 2013 supports only two temporal layers per video stream. The question is how many streams the client will send if, for example, the following video qualities are required:

16:9 1080p 30 fps

16:9 1080p 15 fps

16:9 480p 15 fps

4:3 640×480

3:4 480×640

Sergey

It depends on a variety of criteria and requests, most of which are not documented anywhere. The theoretical limit is reportedly no more than five encoded streams.