The covers have been removed from Lync ‘Wave 15’ and the general public can now download and install a preview version of Lync Server 2013. This presents the opportunity to finally discuss a topic which has been broadly misunderstood throughout the industry for quite some time: what exactly does “H.264 AVC/SVC” support mean in the upcoming Lync platform, and how might it relate to the rest of the video conferencing industry? Microsoft has been talking for some time about introducing a standards-based video codec as a replacement for its proprietary Real-Time Video (RTV) codec, and announcements and specification documents have even been published in the past year, but until last week those statements were never officially confirmed. A brief look at the newly released features shows a strong focus on the video experience in the next Lync platform.

Yet the simple declaration that “Lync 2013 supports H.264 video” tends to lead one to believe that the next version of Lync will be able to connect to any existing standards-based video conferencing system, doing away with the need for expensive gateways. That assumption would be completely incorrect, as there is much more to the story than simply providing a new video codec in the product.

But before going into too much detail on how Microsoft is utilizing H.264 it is important to understand some of the history and background around video conferencing in the industry.

Basic Concepts

In order to establish a video call between endpoints a series of events must be able to successfully take place over a variety of protocols and systems. One of the most basic concepts to understand is that these communication sessions are generally comprised of two separate types of communication streams: signaling and media. Signaling is primarily control traffic that typically (and specifically in Lync) will traverse some sort of server component that facilitates the discussion between 2 or more endpoints. Media is a separate session that may follow the same path as the signaling traffic (in the event of multi-party conference calls hosted on a server) or may be transmitted directly between both endpoints completely bypassing any central components (in the event of a native peer-to-peer call). In some video integration scenarios both the signaling and media will traverse through one or more gateways which may also even perform some level of transcoding in the media payload. In other scenarios a third-party system may be capable of communicating directly to the server component in native fashion without the need for signaling translation and/or media transcoding.

Signaling

For Lync interoperability any endpoint supporting native integration (from Microsoft or a third-party) must be able to speak Microsoft’s specific implementation of Session Initiation Protocol (SIP) in order to interact with Lync. Although Microsoft Lync is based on SIP standards, there are a number of extensions included in the stack which are unique to the platform. For a gateway-based integration any endpoints which are not able to speak directly to Lync are connected through at least one gateway which handles the translation of Microsoft SIP to other standards-based signaling protocols which support video, like H.323 or SIP.

So the key concept to understand in this section is that unless a third-party endpoint or solution can natively speak the specific Microsoft ‘dialect’ of SIP then a gateway which can speak Microsoft SIP is required.

Media

The actual negotiation of the media session is controlled by Session Description Protocol (SDP) information embedded in the SIP signaling, which allows the two endpoints to decide which media codecs to use and what IP addresses and ports to send their outbound media streams to. But the established media session is made up of more than just the media itself (e.g. video frames). Media sessions in Lync utilize Real-time Transport Protocol (RTP) and Real-time Transport Control Protocol (RTCP). While RTP carries the actual media stream, RTCP is used to facilitate the handshake, control the media flow, provide statistics about the media sessions, and more. So even if two systems share a common video codec, that does not automatically mean that the transport protocols used by each are also compatible.

If an endpoint supports native registration to Lync and contains a compatible media codec and transport protocol then the media session would be able to travel directly between the two endpoints. This is a completely native interoperability scenario which partners like Polycom and Lifesize leverage.

But if there is no compatible codec in common, or the transport protocols are not compatible then additional gateway services will need to be leveraged to transcode the media session between the two endpoints. For example if a Lync client is attempting to initiate a peer-to-peer video call with a third-party video conferencing system and they have no video codecs in common then a gateway service will need to transcode the video session between those two codecs. Clearly that gateway would need to support both codecs and thus act as an intermediary. The media path in this scenario is also proxied through the gateway and is not peer-to-peer. Third-party vendors like Cisco (Tandberg) and Avaya (RADVISION) utilize this gateway approach to provide either separate signaling and media gateways, or a single gateway that handles both types of traffic.
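The native-versus-gateway decision described in the Signaling and Media sections can be sketched as a small decision function. This is only an illustration of the reasoning: the `Endpoint` fields, the `integration_path` function, and the example devices are hypothetical names invented here, not part of Lync or any vendor product.

```python
from dataclasses import dataclass, field


@dataclass
class Endpoint:
    # All fields are hypothetical descriptors used only for this illustration.
    name: str
    speaks_ms_sip: bool                  # can talk Microsoft's SIP dialect natively
    video_codecs: set = field(default_factory=set)
    transport_compatible: bool = False   # RTP/RTCP behaviour compatible with Lync


def integration_path(lync: Endpoint, other: Endpoint) -> str:
    """Classify how `other` would have to be connected to a Lync endpoint."""
    needs_signaling_gateway = not other.speaks_ms_sip
    shared_codecs = lync.video_codecs & other.video_codecs
    # A shared codec is not enough; the transport behaviour must match too.
    needs_media_gateway = not shared_codecs or not other.transport_compatible

    if needs_signaling_gateway and needs_media_gateway:
        return "signaling and media gateway"
    if needs_signaling_gateway:
        return "signaling gateway"
    if needs_media_gateway:
        return "media gateway"
    return "native"


lync = Endpoint("Lync client", True, {"RTV", "H.263"}, True)
native_room = Endpoint("natively registered room system", True, {"RTV", "H.264"}, True)
legacy_room = Endpoint("standards-based room system", False, {"H.264", "H.263"}, False)

print(integration_path(lync, native_room))   # native
print(integration_path(lync, legacy_room))   # signaling and media gateway
```

Note that the second system shares H.263 with the Lync client yet still ends up behind a media gateway, because a common codec alone says nothing about transport compatibility.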

Video Codecs

From the time that video was first introduced in the Communications Server platform there have only ever been two video codecs supported: Real Time Video (RTV or VC-1) and H.263, the latter only being available on the Windows client and not on the Mac client or any servers. For additional details on Video functionality in Lync see this previous article, but the key point here is that RTV can support a range of video resolutions from low quality QCIF through high-definition 720p, while the legacy H.263 codec support provided in the Windows Lync client is limited to low-resolution CIF.

In the traditional video conferencing industry a host of codecs have been supported throughout the decades, the most common being H.261, H.262 (MPEG-2), H.263, and most currently H.264 (MPEG-4 AVC). (The upcoming H.265 codec is in draft status and is still under development.) The remainder of this article will focus on H.264 and its various capabilities.

H.264 Advanced Video Coding

The H.264 AVC (Advanced Video Coding) family of standards is commonly used today for everything from video conferencing to Blu-Ray discs to even YouTube videos. But its many versions are not all the same and there are specific rules for compatibility and backwards-compatibility between these various iterations. Also, be careful with the term “standards-based” as this can be used to mean different things depending on the context. Just as Microsoft’s specific implementation of SIP was ‘based on a standard’ it is not necessarily an industry standard implementation; more on this point later.

This standard includes a variety of different profiles, levels, and versions. Some of the specific versions also introduce additional modes as well, so keeping it all straight can be a daunting task. As of earlier this year the H.264 video standard is comprised of no less than 16 different versions, with the initial approved version supporting the most basic capabilities throughout three unique profiles: Baseline, Main and Extended. The large majority of current generation video conferencing equipment today supports the Baseline profile, and while some devices may be capable of further encoding and decoding higher profiles like Main or Extended it is not very common. In version 3 a new profile called High was introduced and provides for even further video compression, at the cost of some additional processing load, but realistically can reduce the media bandwidth requirements by up to 50%. (On a daily basis I participate in video conferences from my home office at 720p/30fps over bandwidth as low as 512kbps to 768kbps using a Polycom HDX which leverages High Profile. By comparison a properly equipped Lync client capable of sending and receiving 720p video using RTV could utilize up to 1.5 Mbps for the same call.)

H.264 Scalable Video Coding

Where things start to get interesting is with version 8 when Scalable Video Coding (SVC or Annex G) was introduced into the family. The SVC extension adds new profiles and scalability modalities, the latter of which are defined as: Temporal, Spatial, SNR/Quality/Fidelity, or Combined. Some or all of these different formats can be leveraged in a specific implementation to provide a desired functionality.

The new scalability behavior provides for an enriched conferencing experience by allowing users to interactively view varying levels of video quality on-demand and not require real time decoding and re-encoding of video streams. This approach places most of the processing on the endpoints (they are already encoding the streams anyway) but by introducing more intelligence into the endpoint the need to centrally decode and re-encode video can be eliminated. The biggest advantage to this approach is providing different endpoints the ability to display the resolution and frame rate best suited for a given scenario. A central conferencing engine can then change roles from a traditional encoding bridge into an advanced relay agent, sending the desired streams (or portions of streams) to the appropriate endpoints.

Basically the way that SVC works is that the media stream is comprised of individual, complementary layers, starting with a Base Layer that provides the lowest usable resolution and frame rate that any other endpoint compatible with SVC should be able to display. Higher quality options are then provided by one or more Enhancement Layers which are included in the same stream on top of the base layer.

These individual layers are additive, meaning that there is no unneeded duplication of information between the layers. The total bandwidth required to send a video stream should be roughly the same as a non-SVC stream of the same maximum quality.

Note: The actual parameters of the individual layers are dependent on the specific application so the resolutions and rates shown in the following diagram are simply examples used for illustration and in no way define the actual capabilities of different endpoints within Lync or any other SVC-compatible solution available today. The concept of ‘building-up’ additional layers to provide multiple levels of quality is what is important to take away from this section.

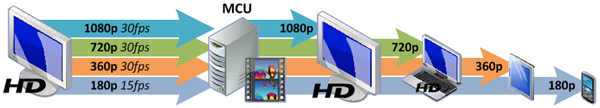

In the following example an encoding endpoint capable of sending up to 1080p resolution is connected to a conference hosted on a Multipoint Control Unit (MCU). Also in the same conference call are four other participants with various supported endpoints capable of displaying different levels of video. The base layer is encoded at a low resolution of 180p at only 15 frames per second, while multiple enhancement layers are added for additional resolutions all the way up to 1080p at 30fps.

In a multi-party conferencing scenario which utilizes SVC each endpoint can ask the MCU for only the highest resolution, frame rate, or quality it can support for a given stream. The MCU will then send the desired layer and all lower layers to that endpoint. As the mobile phone might be limited to displaying low resolution in this scenario it is sent only the base layer and no additional data, whereas the laptop capable of viewing 720p is sent the first three layers. Multiple layers are sent because each higher enhancement layer only includes the delta of data above the lower layers, so all layers up to and including the requested one are required to reassemble the complete frame. Only higher layers are stripped away by the MCU or ignored by an endpoint.
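The relay behavior described here can be sketched in a few lines. The layer names are illustrative only; in particular the 360p entry is an assumed intermediate layer chosen so that 720p is the third layer, and `layers_to_forward` is a hypothetical helper, not an actual MCU API.

```python
# Example layer stack, lowest first; index 0 is the base layer.
# The 360p entry is an assumed intermediate layer, not a value from any spec.
LAYERS = ["180p/15", "360p/30", "720p/30", "1080p/30"]


def layers_to_forward(highest_wanted, layers=LAYERS):
    """Return the requested layer plus every layer beneath it."""
    top = layers.index(highest_wanted)
    return layers[: top + 1]


print(layers_to_forward("180p/15"))  # mobile phone: base layer only
print(layers_to_forward("720p/30"))  # laptop: first three layers
```

Because each enhancement layer only carries the delta above the layers beneath it, the relay never has to decode anything; it simply truncates the stack at the requested layer.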

Note: This diagram is an over-simplification of the layering process in SVC as depending on the specification there may actually exist multiple simultaneous streams, each with its own individual layers. This example could be a single stream of four layers or two separate simultaneous streams of multiple layers. Depending on the type of scaling supported and the range of different options required not all of the data may always be provided in a single stream.

Just because the source encoding endpoint in this example supports sending as high as 1080p/30 does not mean that it will do so all the time. If the MCU is aware that no other participant either supports that resolution or is currently asking for it, then the sending endpoint does not need to waste bandwidth by sending a layer to the MCU which is not being consumed. Only the highest enhancement layer actually being requested, and all layers below it, would need to be sent to the MCU.
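Seen from the sending side, the same rule caps the upload at the highest layer anyone is currently asking for. The sketch below assumes the MCU tracks one requested layer per participant; the function name, the layer labels, and the empty-request behavior are assumptions made for illustration.

```python
def layers_to_upload(requests, layers):
    """Layers the sender must provide so every current request can be served."""
    if not requests:
        return []  # assumption: with no viewers nothing needs to be uploaded
    top = max(layers.index(wanted) for wanted in requests.values())
    return layers[: top + 1]


layers = ["180p/15", "360p/30", "720p/30", "1080p/30"]   # illustrative values
requests = {"phone": "180p/15", "tablet": "360p/30", "laptop": "720p/30"}

print(layers_to_upload(requests, layers))  # the 1080p layer is left out
```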

The immediate benefit of this architecture is that the MCU no longer has to perform any transcoding of the media streams in order to repackage each outgoing stream in the best possible configuration for every individual endpoint. This reduces the processing load on the central ‘bridge’ and turns the system into a Media Relay. Different adaptations of SVC may still include traditional transcoding capabilities for interoperability with non-SVC participants, while systems without transcoding abilities would typically be limited to providing a “lowest common denominator” experience where all participants would see the video sent by the encoding endpoint at whatever resolution it is able to send at, regardless of their own receiving capabilities.

As more and more disparate endpoints are able to connect to the same video conferences the advantages of scalable video become further evident, yet as with anything there is always a trade-off. This scalable approach does place a higher processing cost on all systems involved as there is clearly a lot more intelligence involved here. In addition to the increased encoding work the endpoints are on the hook for sending any and all resolutions desired (and supported) in the call. In a traditional conference the heavy lifting can be placed on the MCU, providing transcoding and upscaling, to reduce the load on the endpoints and network.

Yet displacing the majority of the workload from a centralized MCU out to the endpoints, as well as providing multiple active video sources to endpoints, does often attach some sticker shock to claims of “1080p multiparty video”. How can a single endpoint support seeing multiple video streams at high resolutions without crippling the network? The answer is simply that this does not happen, as viewing resolution is always limited by screen real estate. Whether video is viewed on a 15” laptop screen or a 50” LCD display, if both are capable of displaying 1920×1080 pixels then this is the upper limit of pixels which can be used to show video. It is silly to think that this monitor would be able to display four continuous streams of HD video, as the vertical dimension of 1080 pixels is only divisible by 720 pixels (the vertical pixel value for 720p HD) 1.5 times, and the same ratio applies horizontally (1920 / 1280). This means that only one 720p video and only half of a second video window would fit on this screen at full resolution. And when dealing with desktop video conferencing, unless there is a secondary monitor attached to display the video window there is no room left to see any other running applications.
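The screen real estate argument is simple arithmetic, worked through below under the assumption of a 1920×1080 panel and full-resolution 720p video windows. The helper name is made up for this example.

```python
DISPLAY = (1920, 1080)    # assumed full-HD panel (width, height)
VIDEO_720P = (1280, 720)


def fit_ratio(display, video):
    """How many video frames fit across and down the display, as fractions."""
    return display[0] / video[0], display[1] / video[1]


across, down = fit_ratio(DISPLAY, VIDEO_720P)
whole_windows = int(across) * int(down)

print(across, down)     # 1.5 1.5
print(whole_windows)    # 1

# Four full-resolution 720p tiles would need more pixels than the panel has.
needed = 4 * VIDEO_720P[0] * VIDEO_720P[1]
available = DISPLAY[0] * DISPLAY[1]
print(needed > available)   # True
```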

Note: The term “High Definition” (HD) is thrown around often to mean different things, but technically it defines a video resolution ‘higher than that of standard definition’ (SD). The aspect ratio alone cannot always be used to clarify the level of definition as SD can include some widescreen (16:9) formats. The overall pixel count and interlacing modes are more important to identifying the definition type of a given resolution.

Traditionally in video conferencing VGA (640×480) and 480i (720×480) are where SD ends and 720p (1280×720) is where HD begins. There is also a mid-tier resolution of 480p (720×480) which is classified as “Enhanced Definition” but is typically not used in video conferencing.

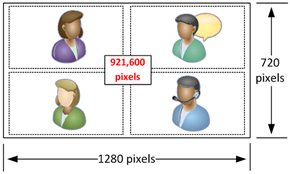

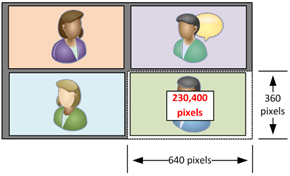

The following diagram illustrates how viewing multiple active video streams does not exponentially increase bandwidth utilization as additional participants join the conference. Using an example resolution of 720p (1280×720 pixels) there are a limited number of pixels (921,600) available for which to display video.

- On the left side a traditional MCU has encoded the four separate participants into a single video stream at 720p resolution and the receiving endpoint is able to display the video in full resolution.

- On the right side an SVC-enabled conference with the same participants is shown, where this time each tile is actually a separate VGA stream delivered directly to the endpoint. The four individual sessions can be laid out on the screen based on the client software capabilities.

Where things start to get more complicated is how the overall bandwidth could be calculated and compared between these calls. If in this example RTV was used then the single 720p session would require between 900-1500 kbps while VGA (640×480) sessions in RTV typically consume between 300-600 kbps. This works out to roughly 2.5 to 3 times more bandwidth required when moving from standard definition VGA to high definition 720p. Yet a single 720p stream would be a maximum of 1500 kbps while four simultaneous VGA streams could be up to 2400 kbps at maximum (600 * 4).
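The comparison above can be reproduced with a few lines of arithmetic. The ranges are the RTV figures quoted in the text; the dictionary and function names are just scaffolding for the example.

```python
# RTV bandwidth ranges in kbps as quoted in the text above.
RTV_KBPS = {"720p": (900, 1500), "VGA": (300, 600)}


def total_kbps(resolution, streams=1):
    """Return the (min, max) aggregate bandwidth for a number of equal streams."""
    low, high = RTV_KBPS[resolution]
    return low * streams, high * streams


print(total_kbps("720p"))      # (900, 1500)
print(total_kbps("VGA", 4))    # (1200, 2400)

# Per-stream cost of moving from VGA to 720p, at the low and high ends.
print(900 / 300, 1500 / 600)   # 3.0 2.5
```

So four VGA tiles can cost more in aggregate than one composited 720p stream, even though each individual tile is far cheaper.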

The actual mathematics behind calculating video bandwidth is much more complex than this basic explanation, but the concepts are still applicable. So exactly how ‘scalable’ is scalable video coding? As more participants join a conference and attempt to send media the experience will require even more media sessions and additional bandwidth. Yet given that the same original piece of screen real estate is used, each participant’s video representation will need to shrink in order to fit more participants on the screen, allowing each stream to utilize an even lower resolution. For example moving up to 16 concurrent video sessions would limit the maximum usable resolution to 320×240 pixels per tile, yet the signaling and processing involved to negotiate that level of concurrency may be more than a given platform could be designed to handle.

UCI Forum

Back in April 2010 a number of industry leaders (Microsoft, HP, Polycom, LifeSize) got together to form the Unified Communications Interoperability Forum (UCIF), focused on creating a set of specifications and guidelines which companies can utilize to build and adapt their solutions around a common set of interoperable protocols. The forum’s vision is to deliver a rich and reliable Unified Communications experience, initially focusing on the video experience.

What is important about this venture is that Microsoft has since announced they would be adopting H.264 SVC technology from Polycom. They have also published a specifications document, entitled Unified Communications Specification for H.264 AVC and SVC UCConfig Modes, the latest draft (v1.1) of which can be found here.

UCConfig Modes

Although this specification document contains a lot of information the most important part for this article is the defined UCConfig Modes which relate to the various scalability modalities introduced in this standard.

- UCConfig Mode 0: Non-Scalable Single Layer AVC Bitstream

- UCConfig Mode 1: SVC Temporal Scalability with Hierarchical P

- UCConfig Mode 2q: SVC Temporal Scalability + Quality/SNR Scalability

- UCConfig Mode 2s: SVC Temporal Scalability + Spatial Scalability

- UCConfig Mode 3: Full SVC Scalability (Temporal + SNR + Spatial)

What these multiple modes define are differing levels of video scalability with additional processing requirements placed on each higher level, but with the returned benefit of reduced overall bandwidth. Take note that the multiple streams at different resolutions are additive so that the overall bandwidth is not three separate complete streams, as the data from the lower quality stream is used to ‘fill in the gaps’ in the higher quality stream. So an endpoint asking to display a 720p stream would be relayed all streams underneath that resolution as well so it has the ‘complete picture’.
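The mode list can be summarised as a lookup from mode name to the scalability types it combines. This is just a compact restatement of the list for illustration; the `Scalability` enum and `supports` helper are hypothetical and do not come from the specification.

```python
from enum import Flag, auto


class Scalability(Flag):
    NONE = 0
    TEMPORAL = auto()
    QUALITY = auto()    # also referred to as SNR scalability
    SPATIAL = auto()


UCCONFIG_MODES = {
    "0": Scalability.NONE,
    "1": Scalability.TEMPORAL,
    "2q": Scalability.TEMPORAL | Scalability.QUALITY,
    "2s": Scalability.TEMPORAL | Scalability.SPATIAL,
    "3": Scalability.TEMPORAL | Scalability.QUALITY | Scalability.SPATIAL,
}


def supports(mode, feature):
    """True if the given UCConfig mode includes the scalability feature."""
    return feature in UCCONFIG_MODES[mode]


print(supports("2q", Scalability.QUALITY))   # True
print(supports("2q", Scalability.SPATIAL))   # False
```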

Mode 0 means that no scalability is provided. It still supports multiple independent simulcast streams generated by the encoder, so although the scalability features of SVC are not provided in this mode the specification allows for multiple streams for each resolution requested, at a specific frame rate.

| Stream | Base Layer |
| --- | --- |
| 1 | 720p 30fps |
| 2 | 360p 30fps |
| 3 | 180p 15fps |

Mode 1 introduces Temporal Scaling which provides an endpoint the ability to send a single video stream per resolution for multiple frame rates. This means that if the endpoint is asked to send two separate resolutions (e.g. 720p and 360p) then it will send two separate video streams at the same time, but the receiving end can display either 30fps, 15fps, or 7.5fps by simply dropping entire frames of the video sequence. So if 30fps is the highest frame rate sent then the receiving end can display the video at 15fps by dropping every other frame, or display 7.5fps by dropping three out of every four frames. It should be noted that the basic H.264 AVC standard already supports some level of temporal video scaling but it was improved upon in the SVC version.

The following diagram depicts how a decoding endpoint would utilize Temporal Scaling to display either 30fps (blue arrows) or 15fps (red arrows). To display 7.5fps every fourth frame would be used (the first and last frame in this example).

| Stream | Scalable Layers | | |
| --- | --- | --- | --- |
| 1 | 720p 30fps | 720p 15fps | 720p 7.5fps |
| 2 | 360p 30fps | 360p 15fps | 360p 7.5fps |
| 3 | 180p 15fps | 180p 7.5fps | |
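Frame dropping under Temporal Scaling can be illustrated with a toy model. The sketch assumes a simple three-level hierarchy in which every fourth frame belongs to the lowest temporal layer and every second frame to the middle one; this layer assignment is an assumption made for illustration, not a reading of the specification.

```python
def temporal_layer(frame_index):
    """Assign a frame to a temporal layer (0 = 7.5fps, 1 = 15fps, 2 = 30fps)."""
    if frame_index % 4 == 0:
        return 0
    if frame_index % 2 == 0:
        return 1
    return 2


def frames_to_decode(frame_indices, max_layer):
    """Keep only the frames at or below the requested temporal layer."""
    return [i for i in frame_indices if temporal_layer(i) <= max_layer]


frames = list(range(9))                 # nine consecutive frames sent at 30fps

print(frames_to_decode(frames, 2))      # [0, 1, 2, 3, 4, 5, 6, 7, 8]  -> 30fps
print(frames_to_decode(frames, 1))      # [0, 2, 4, 6, 8]              -> 15fps
print(frames_to_decode(frames, 0))      # [0, 4, 8]                    -> 7.5fps
```

The receiver never re-encodes anything; it simply skips whole frames that belong to layers above the rate it wants to display.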

Mode 2q first applies the benefits of Mode 1 to the stream and then introduces Quality Scaling which encodes additional levels of image quality at the same resolution and frame rate as Mode 1. There still exists a separate stream for each resolution (e.g. 3 streams to send 720p, 360p, and 180p) but each stream may include different qualities for a specific resolution and frame rate. A Quantization Parameter (QP) is used to define the level of processing applied to each stream.

The following images illustrate in a simplified manner the results between different QP values (a higher value indicates lower quality).

| Stream | Scalable Layers | | |
| --- | --- | --- | --- |
| 1 | 720p 30fps, Quality 1 | 720p 30fps, Quality 0 | 720p 15fps, Quality 0 |
| 2 | 360p 30fps, Quality 1 | 360p 30fps, Quality 0 | 360p 15fps, Quality 0 |
| 3 | 180p 30fps, Quality 0 | 180p 15fps, Quality 0 | |

Mode 2s, like 2q, applies Mode 1 first but where it differs is that instead of providing various Quality levels it leverages Spatial Scaling to intermix multiple adjacent resolutions into the same stream. This provides for more resolution choices (5 different resolutions in the example below) but does not increase to 5 separate streams.

The following images illustrate in a simplified manner the difference in resolutions provided in each individual stream.

| Stream | Scalable Layers | | |
| --- | --- | --- | --- |
| 1 | 720p 30fps | 480p 30fps | 480p 15fps |
| 2 | 360p 30fps | 240p 30fps | 240p 15fps |
| 3 | 180p 30fps | 180p 15fps | |

Modes 2s and 2q are not additive, so only one version or the other could be used at one time. Thus Mode 3 is defined as a combination of features included throughout the lower modes providing the benefits of temporal, quality, and spatial scaling across even fewer simultaneous streams. The table below shows a single stream which can now incorporate a mix of adjacent resolutions, multiple frame rates, and different quality levels.

| Stream | Scalable Layers | | | | |
| --- | --- | --- | --- | --- | --- |
| 1 | 720p 30fps, Quality 2 | 720p 30fps, Quality 1 | 480p 30fps, Quality 1 | 480p 30fps, Quality 0 | 480p 15fps, Quality 0 |
| 2 | 360p 30fps, Quality 0 | 180p 30fps, Quality 0 | 180p 15fps, Quality 0 | | |

Resolutions

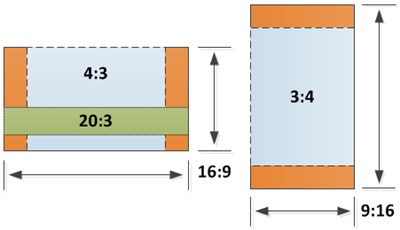

The specification document also lists a large variety of supported resolutions, including both landscape and portrait orientations.

| Aspect Ratio | Resolutions |
| --- | --- |
| 16:9 Widescreen | 1080p (1920 x 1080), 720p (1280 x 720), 540p (960 x 540), 480p (848 x 480), 360p (640 x 360), 270p (480 x 270), 240p (424 x 240), 180p (320 x 180), 90p (160 x 90) |
| 4:3 Normal | VGA (640 x 480), 424 x 320, QVGA (320 x 240), QQVGA (160 x 120) |
| 20:3 Panorama | Pano288p (1920 x 288), Pano192p (1280 x 192), Pano144p (960 x 144), Pano96p (640 x 96) |
| 9:16 | 1080 x 1920, 720 x 1280, 540 x 960, 480 x 848, 360 x 640, 270 x 480, 240 x 424, 180 x 320, 90 x 160 |
| 3:4 | 480 x 640, 320 x 424, 240 x 320, 120 x 160 |

By comparison RTV is limited to providing only a few different resolution options across the same landscape aspect ratios, highlighting just how many new options are available in the UCConfig specification of H.264 SVC.

| Aspect Ratio | Resolutions |
| --- | --- |
| 16:9 Widescreen | 720p (1280 x 720) |
| 4:3 Normal | VGA (640 x 480), CIF (352 x 288), QCIF (176 x 144) |
| Panorama | CIF (1056 x 144) |

Lync 2013 Video Capabilities

Clearly the UCConfig specification defines a large variety of modes and options, yet this does not mean that Lync 2013 utilizes the entire set of available features. In fact the implementation of H.264 AVC/SVC provided in Lync 2013 appears to only utilize some of the levels, according to this published documentation available in MSDN (under the RTCP specifications) which is related to the H.264 AVC/SVC UCConfig Mode Specification: 2.2.12.2 Video Source Request (VSR).

In this section there exists a specific definition for the maximum UCConfig Mode value that a receiver can support and the valid values are either UCConfig Mode 0 or UCConfig Mode 1. Values of 2 or larger are specifically not allowed.
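As a rough illustration of that restriction, a receiver-side check might look like the following. The function and constant names are hypothetical and this is not how the field is actually encoded or parsed on the wire; it only mirrors the rule that 0 and 1 are the valid values.

```python
ALLOWED_MAX_UCCONFIG_MODES = {0, 1}   # per the restriction described above


def validate_max_ucconfig_mode(value):
    """Return the value if it is permitted, otherwise raise ValueError."""
    if value not in ALLOWED_MAX_UCCONFIG_MODES:
        raise ValueError(f"maximum UCConfig mode {value} is not allowed")
    return value


print(validate_max_ucconfig_mode(1))   # 1
```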

This would seem to indicate then that Lync 2013 will support UCConfig Mode 0 (for backwards compatibility with H.264 AVC-only compatible systems) while leveraging only Temporal Scaling in Mode 1 for multi-party video conferencing sessions using Lync 2013 clients and servers.

In the New Client Features chapter of the Lync Server 2013 Preview documentation the following items are stated under the Video Enhancements section.

- Video is enhanced with face detection and smart framing, so that a participant’s video moves to help keep him or her centered in the frame.

- High-definition video (up to 1080p resolution) is now supported in conferences.

- Participants can select from different meeting layouts: Gallery View shows all participants’ photos or videos; Speaker View shows the meeting content and only the presenter’s video or photo; Presentation View shows meeting content only; Compact View shows just the meeting controls.

- With the new Gallery feature, participants can see multiple video feeds at the same time. If the conference has more than five participants, video feeds of only the most active participants appear in the top row, and photos appear for the other participants.

- Participants can use video pinning to select one or more of the available video feeds to be visible at all times.

- Presenters can use the “video spotlight” feature to select one person’s video feed so that every participant in the meeting sees that participant only.

- With split audio and video, participants can add their video stream in a conference but dial into the meeting audio.

These items primarily focus on the new Gallery experience where a maximum of 5 video participants can be viewed at the same time when joining a Lync 2013 conference call. The first enhancement worth noticing is that video sessions in conference calls (hosted on the Lync AVMCU) can now scale up to 1080p resolution, which is not possible in Lync 2010 or OCS. Secondly multiple active-speaker video streams are supported, which was also limited to a single active-speaker in previous server versions.
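The Gallery rule quoted above (video for the most active participants, photos for everyone else) can be sketched as a simple ranking. How Lync actually measures activity is not described in the documentation, so the numeric scores and the `gallery_layout` helper below are purely hypothetical.

```python
MAX_GALLERY_VIDEOS = 5   # maximum simultaneous video participants in the Gallery


def gallery_layout(activity):
    """Split participants into (video row, photo row) by descending activity."""
    ranked = sorted(activity, key=activity.get, reverse=True)
    return ranked[:MAX_GALLERY_VIDEOS], ranked[MAX_GALLERY_VIDEOS:]


# Hypothetical activity scores used only to drive the example.
activity = {"Ann": 9, "Bob": 7, "Cy": 1, "Dee": 8, "Eli": 5, "Fay": 6, "Gus": 2}
videos, photos = gallery_layout(activity)

print(videos)   # five most active participants, shown as video
print(photos)   # remaining participants, shown as photos
```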

These capabilities are made possible by leveraging SVC and not by the introduction of any increased transcoding abilities of the Lync AVMCU.

In the New Server Features chapter the following items are also listed under the New Video Features section.

- HD video – users can experience resolutions up to HD 1080P in two-party calls and multiparty conferences.

- Gallery View – in video conferences that have more than two people, users can see videos of participants in the conference. If the conference has more than five participants, video of only the most active participants appear in the top row, and a photo appears for the other participants.

- H.264 video – the H.264 video codec is now the default for encoding video on Lync 2013 Preview clients. H.264 video supports a greater range of resolutions and frame rates, and improves video scalability.

The first statement seems a bit confusing as in the new client features section a resolution of 720p was stated as the limit in conferences, so this may indicate that 1080p resolution is supported in 2-party peer to peer calls only. This seems logical as Lync 2010 peer-to-peer calls could support 720p and conference calls were limited to VGA so it appears that by leveraging H.264 AVC/SVC instead of RTV both of those limitations can be increased by some factor. This also may point to the use of some hardware acceleration to provide these higher resolutions that RTV was not capable of, but that is only an educated guess as there is no mention of hardware-acceleration in the available 2013 documentation.

High definition video is now available when connecting to multi-party conferences hosted on a Lync 2013 server. This may not mean though that legacy clients which only support RTV will be able to leverage HD resolutions as RTV is still limited to VGA on the Lync AVMCU.

The Lync Server 2013 AVMCU does not display a single high resolution video stream incorporating multiple participants, traditionally called Continuous Presence, in the way that third-party video conferencing bridges can by decoding and re-encoding multiple streams. These conferencing solutions can provide for many more than 5 endpoints simultaneously in a single video stream comprised of various standards-based room systems, immersive telepresence rooms, mobile clients, and even Microsoft SIP clients like OCS or Lync. (The Lync 2013 native video conferencing experience and capabilities will be covered in more detail in a future article.)

Video Interoperability

At this point it should be pretty clear that even though Lync now supports the H.264 video codec, the signaling interoperability is still a key component. Without that level of connectivity first being addressed (via either native registration or gateways) there is no chance of negotiating any type of communication between the disparate systems.

Additionally, to revisit an earlier statement: although it appears that UCConfig Mode 0 support provides some interoperability with H.264 AVC, exactly what is that level of compatibility? While the media payload as defined by the UCConfig specification should be compatible with any other video conferencing system that already supports H.264 AVC Baseline Profile, that does not necessarily mean that the media control protocols are completely compatible. Thus even if a currently available signaling gateway or natively registered endpoint is able to connect to Lync Server 2013 it may still not be able to negotiate media even if both support H.264 Baseline Profile. An existing media gateway could be updated to address this but the traffic would still need to proxy through the gateway (for control protocol reasons, not media transcoding) in the event that native registration is not utilized.

Realistically this is what the creation of the UCI Forum and the publishing of the UCConfig specifications was designed for, so that third parties and partners can update their solutions to interoperate with this new specification. So although this is not a silver bullet for Lync and third-party video interoperability it is much closer than what was previously available: by moving away from the proprietary RTV codec and towards the open-standards-based H.264 codec, the amount of work required to design and qualify Lync-compatible systems is greatly reduced.

Although these new features and capabilities in Lync Server 2013 will certainly bring video conferencing to the forefront of the desktop UC experience, Lync will still rely on partners to continue to provide interconnectivity to the masses of existing video conferencing solutions as well as add additional video conferencing value and performance to the overall platform. Microsoft has made the first strides in adopting an industry-standards-based scalable video codec and it is now up to the rest of the players in the industry to decide if they will follow suit. It is also worth mentioning that if other vendors go higher up the SVC scale to adopt capabilities available in Modes 2 or 3, then as long as the defined UCConfig specifications are adhered to those systems will still be backward compatible with the Mode 1 usage available in Lync 2013.

The bottom line is that traditional third-party video systems will still need to either continue supporting native registration or continue utilizing a signaling gateway to communicate with Lync 2013.

[…] in Lync 2013 : Jeff Schertz’s Blog Posted on July 23, 2012 by johnacook http://blog.schertz.name/2012/07/video-interoperability-in-lync-2013/?utm_sou…:+jschertz+(Jeff+Schertz) […]

Thanks for the great write-up Jeff. Great content, and broken out into digestible pieces. Thanks.

Great writeup.

[…] 2013 will Support the industry Video Standard H.264 AVC/SVC. As Jeff Schertz points out in his excellent post on this subject however, this does not mean Lync 2013 will be “..able to connect to any existing standards-based […]

[…] Video Interoperability in Lync 2013: Jeff Schertz’s Blog Jeff’s how-to posts are among the best you’ll find on Lync Server, period. His latest post addresses Lync Server 2013′s interoperability with other video conferencing technology out there. And WOW is it detailed! He dissects video codecs, signaling and media to illustrate how video streams work. […]

Stellar post Jeff. I was curious about video compatibility after reading some of the 2013 Preview's documentation. Think hardware manufacturers can get away with firmware updates to support 2013?

Oh, and I linked here from our Lync Insider blog this week. Too thorough a post not to.

For devices which support Lync interop at a signaling level it should just be a new update required when 2013 is released in order to be compatible with any changes in the signaling communications which were introduced in 2013 to facilitate changes in video capabilities.

Hey Jeff, great job on this man.

Good article

[…] Video Interoperability in Lync 2013: http://blog.schertz.name/2012/07/video-interoperability-in-lync-2013/ Lync Server 2013 Preview – 5 Feature Changes Coming: […]

Great write-up. It would be neat to see a comparison of how Lync's SVC implementation compares to Vidyo's.

Thank you Jeff, great job!

Sadly I've just bought the RTV option for a Polycom HDX-8000…

I didn't know that the RTV option disables the internal MCU of the HDX.

Because we don't own an RMX for hollywood squares only the active speaker is visible during an RTV call with Lync 2010.

Can you tell me if the HDX-Client will be able to show multiple video feeds (HD) at the same time with Lync 2013 without using an RMX.

Thank you!

Kind regards,

Wolfgang

Hi Jeff,

Great article and big fan!

I was hoping that you could give me a comparison of Lync and CMA desktop. I am struggling to see any differences other than the initial investment required for Lync as opposed to just buying a CMA appliance.

Any ideas?

Thanks!

Hi Jeff, fantastic information.

Regarding the confusion about 720p vs. 1080p for conferences: The "What's New for Clients" page has been updated since you wrote this to correctly reflect that Lync 2013 supports up to 1080p for conferences (as well as P2P). It is now in agreement with the "New Video Features" page.

Thanks for the clarification Doug.

[…] Jeff Schertz, Lync MVP, shares a very good article on this important point of the new Lync release: Lync 2013. Here is my free translation of his article: http://blog.schertz.name/2012/07/video-interoperability-in-lync-2013/ […]

Great post as usual , Jeff – thanks for once again concisely tackling a complex subject

Great article, will be interested to see if there is any roadmap in Lync to integrate Personal Video (Skype) with the Lync solution. Although we also know Skype still needs a gateway to integrate with other vendors, now that they are one Microsoft this can help to bring video to everyone, everywhere.

If you look at the announced features for Lync 2013 you'll see that federation with Skype will be included. Only the IM, Presence, and Audio modalities are listed for support at launch of 2013 but one can assume that video will be added to that list in the future.

I still believe Cisco Jabber is a better product when talking about interoperability with videoconference and TelePresence systems.

I definitely agree with you David, Cisco Jabber is still the best product if you plan to set up video conf call. It's pretty easy to install and fast to configure.

Although the Cisco suite does have some inherent advantages, 'interoperability' is certainly not one of them. I think we'll agree to disagree here 🙂 Also, having configured many video products from Tandberg/Cisco, Polycom, and other vendors, the Jabber suite is easily the most complicated. Take a look at Cisco's 50 page 'using certificates with VCS' guide as an example of how complex those products are.

I make you right about that Jeff.

What's more, this is that good, I'm afraid I'm going to have to pinch it for teaching!!

Thanks

[…] Microsoft is now moving away from its proprietary RTV protocol and towards established standards, and it is also up to the other vendors to put a gateway-free integration in place. A very good article explaining Microsoft's implementation of the video standard and the challenges that remain can be read here […]

I am having a tough time clarifying the following:

- For Lync to Lync P2P communication, does the default codec now use SVC at UCConfig Mode 0 or Mode 1, and not the RT codec at all? If so, when, if ever, is the RT codec used?

- In a multipoint call, does the default codec now use SVC at UCConfig Mode 0 or Mode 1, and is each Lync client sending multiple unicast streams (A sending a stream to B and the same stream to C), or is MS using some type of media relay technology, whereby A sends a single stream to the server and then B and C receive A's stream from the server?

- In an interop call, assuming the remote participant can talk MSSIP, what codec is used and at what resolution? With R14 and earlier the answer is H.263; with R15 is it H.264 AVC (SVC Mode 0) at 720p30, meaning that Lync to non-Lync can now be HD without having to use RT?

All Lync 2013 calls will use H.264 by default. RTV will only be used in 2013 to Legacy (2010, 2007) peer calls, or when a Legacy client joins a multi-party conference call. In the conference call scenario 2013 clients will send two encoded video streams, one with H.264 for all other 2013 participants to utilize, and another with RTV for the legacy clients to see the active-speaker view they have always been compatible with. The Lync AVMCU does no video transcoding, so it is up to the endpoints to provide video in the formats needed by all participants. As I've already described the interop scenarios are not yet defined as video partners are still working with Microsoft on defining the operation.
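The sending behavior described in this reply can be sketched as a small decision function. This is only an illustration of the rules as stated above, not Lync's actual logic: the function name `video_codecs_to_send` and the version labels ("2007", "2010", "2013") are hypothetical, and the sketch assumes that a legacy client only ever sends RTV.

```python
# Hypothetical labels for the client generations mentioned in the reply.
LEGACY_VERSIONS = {"2007", "2010"}


def video_codecs_to_send(sender_version, other_versions):
    """Return the set of video encodings a sender produces for a call.

    sender_version: version label of the sending client.
    other_versions: version labels of every other participant
                    (one entry for a peer call, several for a conference).
    """
    # Assumption: legacy clients have no H.264 encoder and always send RTV.
    if sender_version in LEGACY_VERSIONS:
        return {"RTV"}

    codecs = set()
    # H.264 is the default whenever another 2013 client will view the video.
    if any(v == "2013" for v in other_versions):
        codecs.add("H.264")
    # RTV is added (or used alone in a peer call) when a legacy client is present,
    # because the AVMCU relays streams and does not transcode them.
    if any(v in LEGACY_VERSIONS for v in other_versions):
        codecs.add("RTV")
    return codecs


print(video_codecs_to_send("2013", ["2013", "2010"]))
```

In a mixed conference the 2013 sender ends up encoding twice, which is the cost of having a relay MCU rather than a transcoding one.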

Got it, thank you. So in a call between 3 Lync 2013 endpoints (A, B and C), is A sending its stream to B and C as 2 separate H.264 SVC streams, or is it sending 1 stream to the AVMCU, with the AVMCU providing it to B and C in some type of media relay function?

Media sessions in multi-party calls are never peer-to-peer and this has been true since LCS. P2P media is only used in 2 party calls. In conference calls all media sessions are sent to the AVMCU, which relays the proper session(s) to each endpoint depending on their supported capabilities.

Thanks for this profound article.

I would like to connect our Lifesize videosystems (compatible with Lync 2010) to Lync 2013.

I have been told that I need a third-party MCU for that and a port-based license for every Lync user joining a meeting. So I don't understand why it should not be possible to still use the built-in Lync MCU (I've heard about the AVMCU) beside a 3rd party MCU, which should only be the bridge from the Lifesize system to Lync…

It would be great if you could help me with that… Thanks in advance!

The Lifesize systems do not support RTV natively and require the UVC video Engine to establish any video sessions with Lync 2013 clients. In Lync 2010 the H.263 codec was used for low-resolution interoperability but that option is gone with Lync 2013. Currently the use of RTV-enabled endpoints (like the Polycom HDX and Group) or an RTV enabled bridge (like the Polycom RMX or Lifesize UVC engine) are your only choices for video interoperability. Keep an eye out for details from Microsoft video partners at the Lync Conference next month for improved options in this area.

So in that case, what is the media flow for a 3 way call between Lync 2013 endpoints, If A is sending to the AVMCU, is the AVMCU providing a compositing function and then sending a single image to B and C (so A+B to C, B+C to A, and A+C to B)?

All media sessions are from client to MCU. The MCU does not transcode any of the video session like a traditional hardware MCU would. SVC provides for multiple sessions to be relayed to the clients. So in this example if all three clients are running Lync 2013 then they each send a single stream to the MCU (which may include one or more different resolutions in simulcast streams). Each client would receive two concurrent streams from the MCU of the other 2 parties. Lync 2013 presents these streams in their own windows as part of the 'Gallery' view. If a client switches to 'Speaker' view then it will drop the other incoming video stream and only ask the MCU to relay the active speaker's video.
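The relay behavior in this reply can be modeled with a short sketch. It assumes a simplified MCU that only decides which participants' streams to forward to each viewer; the function name `streams_relayed_to` and the view labels are hypothetical, and the limit of 5 gallery streams is taken from elsewhere in this discussion. How the real AVMCU chooses which five participants to show, and what the active speaker sees in Speaker view, are not modeled here.

```python
MAX_GALLERY_STREAMS = 5  # gallery limit mentioned elsewhere in this thread


def streams_relayed_to(viewer, participants, view, active_speaker):
    """List whose video a relay MCU forwards to `viewer` (no transcoding).

    view is "gallery" or "speaker" (hypothetical labels for the two layouts).
    """
    # A client never receives its own stream back from the MCU.
    others = [p for p in participants if p != viewer]

    if view == "speaker":
        # Only the active speaker's stream is requested from the MCU.
        return [p for p in others if p == active_speaker]

    # Gallery view: one separate stream per remote participant, up to the cap.
    return others[:MAX_GALLERY_STREAMS]


participants = ["A", "B", "C"]
for viewer in participants:
    print(viewer, streams_relayed_to(viewer, participants, "gallery", "B"))
```

Each viewer gets the other two parties as separate streams, which matches the "two concurrent streams" in the three-party example above.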

Thank you, this sounds like SVC Media Relay. The layers in UCConfig Mode 0 or Mode 1 are very simplistic but if the AVMCU is making even the base layer available to multiple decoders it is a media relay function.

That is exactly what it is, a media relay MCU and not a transcoding MCU. The base layer will be available to any and all endpoints which can interoperate at the signaling level. If those endpoints happen to understand any of the more advanced portions of the specification (e.g. Mode 1 in Lync 2013) then the additional benefits would be available to those decoders as well.

Regarding "If a client switches to 'Speaker' view": is any information about this present in the Lync trace?

Also, in a Lync 2013 meeting with 5 participants, where 4 are Lync clients and one is a third-party interface, the server does not forward all participants' video streams to the third party, but the third party's video reaches all participants.

Is there any information we need to send?

No, this cannot be seen in the SIP signaling as that behavior is a function of the Video Source Requests (VSR) communications that are transmitted between the client and server in the RTCP channel.

Nicely written post Jeff, I'll use it as a point of reference whenever I'm losing the will to live after trying to explain the notion of off-platform video dialling to yet another confused customer.

Just one thing though, I'm not sure that chord progression would work so well if you skipped all the frames with the Bmaj in, even if it is on a bass 😉

Martin, sharp eyes (and ears as well I suppose)! Those are actually random frames from a video clip of mine taken out-of-sequence for illustrative purposes 🙂

Excellent article, and thanks for leaving your comments on my blog http://blog.asirsl.com

This is one of the most brilliant pieces I have ever come across… Thanks a ton Jeff.

Great Post.

The big question for me and alot of my clients is….

Will Lync 2013 natively communicate with Cisco Telepresence Video EndPoints using H264?

Cheers

If you read the final paragraphs in the article you'll see that I state that this is all up to the vendors themselves. All third-party products will need to be updated to work with the new UCConfig specification of H.264AVC/SVC first. So only Cisco can answer your specific question.

A Cisco/Tandberg AMG gateway for Video interop is a requirement now with the Lync 2013 client. Lync 2010 clients were able to register with the Cisco VCS and use H.263 encoding for video but it was removed. Cisco VCS does not talk the same H.264 config modes as Lync 2013 talks so even if one could register a Tandberg endpoint to Lync directly the two endpoints would not be able to negotiate video :(. Maybe Cisco will add additional capabilities for interop but that might be wishful thinking. Great Post BTW. kind regards

Currently the VCS does not even work with Lync 2013, so the AMG is not even applicable yet. If Cisco decides to update the VCS to interoperate with Lync, the AMG will be needed to provide RTV at minimum, or the VCS could be updated to handle H.264 AVC interop with Lync, but that would also require updates to the SIP, SDP, and RTCP protocols to work with Lync 2013. Only the RTP media payload of SVC in Lync 2013 is compatible with a standard H.264 AVC decoder.

Hi,

Thanks for your article.

One question :

Cisco has a solution named the AMG 3610 (Advanced Media Gateway) which can transcode RTV to H.264 (and vice versa). There is a technical (or political…) limitation of 10 simultaneous HD transcoding calls (seriously).

==> do you think with H.264 SVC, limitations could be "removed"

I mean, convert H.264svc to H.264 would be easier (for the CPU, memory, …), right ?

Thx

I'm investigating

In theory, if Cisco updates their interop solution and signaling code in the VCS to support the new SDP changes and the H.264UC codec used by Lync Server 2013, then it's possible that the AMG would no longer be needed to provide standard or high definition video, as the base layer of H.264UC supports the same media payload as H.264 AVC. But without those updates the AMG will be a requirement for 2013 interop, as Lync 2013 has dropped the H.263/CIF option. Without RTV (or H.264UC) interop no standards-based endpoints or conference bridges hosted behind a VCS can negotiate video with Lync 2013 clients; the AMG would be required for RTV transcoding at minimum.

Great article, can't wait for the next one Lync 2013 native video conference experience

Can i block video,allow only audio… since our internet is not so good. i want to block video… and audio only.

is it possible.

my server lync 2013. some clients 2010, some clients 2013.

Yes, you can limit calling types a few different ways in Lync.

Jeff, thanks this outstanding post on Microsoft Lync 2013 video interoperability.

Sir,

why can not we send the slide presentation from Lync client to radvision ?

Thanks,

Lintong

Because the standards-based video systems are not compatible with Microsoft's Remote Desktop Protocol, which is used for content sharing from Lync clients.

[…] about the new H.264 codec has been written by Jeff Schertz. It affected me from a different angle, though: we have […]

Thanks for this wonderful write up. Lots of learning opportunities from your blogs.

Jeff, thanks for this article so incredibly detailed.

HI,Jeff

i have a problem,

I want to interoperate with Lync 2013 using H264. My SDP in SIP is currently: media description: 122 121 123

media attribute:x-caps:121 263:1920:1080:30.0:2000000:1;4359:1280:720:30.0:1500000:1;8455:640:480:30.0:600000:1;12551:640:360:30.0:600000:1;16647:352:288:15.0:250000:1;20743:424:240:15.0:250000:1;24839:176:144:15.0:180000:1

Media Attribute (a): rtpmap:122 X-H264UC/90000

Media Attribute (a): fmtp:122 packetization-mode=1;mst-mode=NI-TC

Media Attribute (a): rtpmap:121 x-rtvc1/90000

Media Attribute (a): rtpmap:123 x-ulpfecuc/90000

Lync's response is the same, but the media is RTV, not H264. Thanks for the help.

Lync does not yet interoperate directly with a standards-based H.264 system as the implementation is unique (notice the X-H264UC declaration). A follow-up article will explain this in more detail, but currently RTV is still used with any third-party solution which may support Lync 2013 interop.
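The point about the X-H264UC declaration can be made concrete by listing the codec names in the rtpmap attributes quoted in the question. The sketch below is a minimal illustration in Python: the attribute lines are rewritten in plain `a=` form from the trace above, while the `m=` line (port and transport) is a hypothetical placeholder since the original was not quoted in full. The helper names are also made up for this example.

```python
import re

# rtpmap/fmtp values are from the question above; the m= line is a placeholder.
SDP_FRAGMENT = """\
m=video 5000 RTP/AVP 122 121 123
a=rtpmap:122 X-H264UC/90000
a=fmtp:122 packetization-mode=1;mst-mode=NI-TC
a=rtpmap:121 x-rtvc1/90000
a=rtpmap:123 x-ulpfecuc/90000
"""

# a=rtpmap:<payload type> <encoding name>/<clock rate>
RTPMAP = re.compile(r"^a=rtpmap:(\d+)\s+([^/\s]+)/(\d+)", re.MULTILINE)


def advertised_codecs(sdp):
    """Map each RTP payload type to the encoding name it is bound to."""
    return {int(pt): name for pt, name, _clock in RTPMAP.findall(sdp)}


def offers_standard_h264(sdp):
    """True only if the plain 'H264' encoding name appears in an rtpmap line."""
    return any(name.upper() == "H264" for name in advertised_codecs(sdp).values())


print(advertised_codecs(SDP_FRAGMENT))
print(offers_standard_h264(SDP_FRAGMENT))
```

An endpoint that only looks for the plain `H264` encoding name finds nothing to match in this offer, which fits the observation that the call falls back to RTV.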

hi,

Thank you for the great article.

I have one question about the HDX series, which supports RTV and H.264 AVC: will the HDX integrate directly with the Lync 2013 AVMCU using Mode 0 or RTV, or should I use a gateway like the RMX or Radvision?

The HDX, when supported with Lync 2013, will interoperate using RTV, as the implementation of H.264 in Lync is not identical to the standard H.264 declaration, so additional development work would be needed to interop at even an AVC decoder level.

Hello Jeff,

If a third-party video conferencing endpoint is compatible to UCConfig and with Lync SIP, it can register directly on AVMCU of Lync and will be able to participate in the Lync view gallery, right?

So a third-party MCU or gateway will not be needed, right?

Is there any videoconference endpoint announced to support this?

If the Microsoft code including CCCP is supported by the endpoint then it can join the AVMCU call, but would need to be updated to actually receive multiple inbound streams and display the gallery view. Also the gallery view is limited to 5 active streams which are typically low resolution streams. If attempting to display high resolution for all 5 streams the inbound bandwidth could be as much as 8Mbps. With a traditional transcoding bridge you can provide up to 16 active speakers within a single 1080p stream which would not grow much above 2Mb.
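The bandwidth comparison in this reply is simple arithmetic, sketched here using only the figures quoted above (5 gallery streams, roughly 8 Mbps in total, versus about 2 Mbps for one composited stream). The even split across streams is an assumption made for illustration, and the function name is hypothetical.

```python
def gallery_inbound_kbps(stream_count, per_stream_kbps):
    """Inbound bandwidth when every remote participant arrives as its own stream."""
    return stream_count * per_stream_kbps


GALLERY_STREAMS = 5        # gallery view limit quoted above
GALLERY_TOTAL_KBPS = 8000  # "as much as 8Mbps" for five high-resolution streams
COMPOSITED_KBPS = 2000     # single 1080p stream from a transcoding bridge

# Assumption: the 8 Mbps figure is split evenly across the five streams.
per_stream_kbps = GALLERY_TOTAL_KBPS // GALLERY_STREAMS

print(per_stream_kbps)
print(gallery_inbound_kbps(GALLERY_STREAMS, per_stream_kbps) // COMPOSITED_KBPS)
```

With a relay MCU the inbound load scales with the number of displayed participants, whereas a transcoding bridge keeps it roughly constant regardless of how many speakers are composited into the picture.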

Can you elaborate on CCCP and if that still has a play here, if an end point doesnt understand C3P can it join and experience the gallery view or it will be back to voice activated? How will content be shared? Will that work across the board whether you rely on SVC or AVC? For example a PLCM, Lifesize joins in SVC mode will content be shared, What if a Cisco joins using AVC? appreciate your input

CCCP is still utilized by Lync 2013 for AVMCU control messaging. If an endpoint does not understand CCCP then it would be limited to primarily peer calling in Lync and would not be able to participate in multiparty conference calls on the AVMCU. Content sharing has nothing to do with the video codec, as Microsoft content sharing is provided through Remote Desktop Protocol (RDP). For more details on RDP and standards-based content sharing interoperability (H.239 & BFCP) take a look at the Polycom RealPresence Content Sharing Suite.

Hey Jeff!

Great post, i have a few questions:

At my corporate we use large amounts of Cisco endpoints and VCS is integrated to OCSR2.

1. HD video between Cisco and OCS is not working. According to my informations we need a gateway like Cisco AMG. Is that true?

2. Also we are upgrading to Lync 2013, which knows h.264. Will we get HD interoperabilty between cisco telepresence and Lync 2013 via h.264? Or we need some sort of gateway (like AMG, or radvision) anyway?

Thx.

Zoltan

Cisco/Tandberg endpoints require the use of a gateway like VCS or an intermediary bridge like the Polycom RMX in either Lync 2010 or 2013. I'm planning a brief follow-up article explaining this in more detail, but Lync 2013 is not out-of-the-box compatible with any standards-based video endpoint even though SVC in Lync 2013 is H.264 standards-based. There is more to interoperability than just having some compatibility with the media encoder/decoder.

Great Job Jeff,

Thanks for updating others across the globe with your knowledge. I am confused about where exactly I can get 1080p media quality in P2P or conferences. Inside the LS Control Panel and LS PowerShell we only have CIF, VGA and 720p media quality so far. Where can we set media quality to 1080p, and which type of PC is recommended for encoding and decoding 1080p and 720p media?

The configuration settings you are looking at are still the RTV settings and have nothing to do with SVC behavior. SVC video calls will automatically select amongst different resolutions based on the available bandwidth, so in order to prevent higher resolutions from being used you can customize parameters defined in the conferencing policies in Lync (these apply to both multiparty and peer-to-peer calls). See this article on TechNet for more details: http://technet.microsoft.com/en-us/library/jj2048…

[…] Excellent Video Reference – If you so desire to argue video interop in Lync and the use of SVC, I challenge you to reach out to fellow Lync expert and highly respected MVP Jeff Schertz for the video deep dive your heart desires. Hold on to your hat… http://blog.schertz.name/2012/07/video-interoperability-in-lync-2013/ […]

Great blog and input from others.

I've recently deployed Lync 2013 with the Avaya/RADVISION Lync gateway. I have 27 endpoints across my network and approx 75 Scopia Desktop/ iPad clients. The gateway is great as some of my legacy endpoints are 4-5yrs old and I didn't have the funds to change them out.

I now have the best of both worlds…Lync clients (internal and federated), RADVISION VC Units, Scopia Desktop Clients, Mobile clients and Polycom/Tandberg units all in one conference.

Interesting feedback, as the Scopia platform is not yet supported with Lync 2013. Seems like you are using the separate Scopia Desktop application which removes the video modality from the Lync client. Ideally the solution should leverage Lync as the only UC desktop client for all modalities.

[…] the article Video Interoperability in Lync 2013 the specifications of the complete H.264 SVC codec adopted by Microsoft were covered in great […]

Thank you for your great blog

Is it possible to fix the lync client aspect ratio 4:3?

Not sure what you mean by 'fix' the aspect ratio. The video's aspect ratio is a result of the camera's viewing area and the codec and resolution used to encode the video.

So what would be the solution to integrate Tandberg (cisco) C series endpoints, Polycom endpoints in a Lync 2013 architecture ?

Currently the only available supported solution is to utilize the Polycom RMX to have all endpoints meet in a bridge. The Lync 2013 clients will utilize RTV for video sessions with the RMX while the other systems will use some level of H.264 (typically AVC High Profile for the Polycom units and H.264/AVC Base Profile for the Tandberg units). The Polycom HDX and Group Series endpoints can connect directly to Lync 2013 and perform both peer-to-peer and conference calls with Lync clients (again still leveraging RTV at this point in time) but for the Tandberg units to be involved the RMX is needed.

Hi Jeff, When GroupSeries support Lync2013/SVC, will they not require RTV lic key?

I cannot comment on future plans which are not publicly announced, but the Options Key for Lync integration will be a requirement regardless of what protocols or codecs are available. The 'RTV' key provides all the Microsoft features including CCCP and other critical components. Also RTV support is still needed when interacting with Lync 2010 clients in a 2013 environment (think federated users, non-migrated users, older client devices, etc).

Hi Jeff, excellent article! I have a question. I am trying to implement video-interop with Lync2013 and I am noticing an issue in the initial SIP handshake itself. Basically the Lync2013 client reports "video was not accepted" after the other party accepts the video call. I tried very hard but I could not figure out why it does this. Do you have any ideas? I think you did mention that Lync 2013 has made changes in SIP signalling as well as RTP/RTCP so may be I need to make the corresponding changes on my side for RTP/RTCP? Any pointers? Thx

Jeff, I have a Lync 2013 client configured on Lync 2013 pool, connecting to RMX 2000 (v 7.6.0). My video goes garbled to the other participants. RMX properties shows that I am sending RTV 424×240, frame rate 14. As per your post, the Lync 2013 client would send two streams – one for H264 for other capable Lync 2013 clients and one with RTV for the legacy clients. So the RTV part is being sent to the Polycom RMX, but then not sure why the video is not reproduced to the other participants. Does it sound like I have to upgrade RMX 2000 to v 8.1 (or 8.2 latest) in order for my Lync 2013 clients to be able to participate in video calls?

Interestingly, if i use a low configuration computer which cannot process HD video, then my video goes in as RTV QVGA and then the other participants can view me fine. Could this be some other case of my Polycom RMX not accepting HD Video from Lync 2013?

Any help would be greatly appreciated. Thanks in advance…

You need to be on at least RMX 8.1 to support video calling with Lync 2013 clients.

[…] Microsoft has done a major overhaul on the video parts within Lync. Where in the past the Lync client supported codecs like H.263 and RtVideo, support for H.263 has stopped and next to RtVideo Microsoft now supports H.264.SVC with a specific SIP implementation. A great description on the Microsoft implementation of H.264.SVC can be found in the blog of Jeff Schertz, Microsoft MVP working for Polycom. The blog is called Video Interoperability in Lync 2013 and can be found here. […]

[…] Note: Many vendors only support H264 mode 0 while Lync's implementation of the layered H264 Mode 1 has many advantages. For an extensive and detailed deep dive on how H264 mode 1 works within Lync, I would recommend you to read Jeff Schertz's excellent blog post "video interoperability in Lync 2013". […]

You mentioned that Polycom uses H.264 high profile but later you mention base profile. Does Lync 2013 support High Profile, Main Profile, or only base profile encoding? Or does it depend on the camera you get like the Logitech C930e which supports H.264 SVC offloading?

Those are different types of profiles and have nothing to do with the cameras or UVC 1.5. Lync does not support H.264 AVC High Profile.

So if Polycom supports H.264 AVC High Profile but Lync 2013 doesn't support High Profile, does this mean that a Polycom to Lync peer-to-peer call has to drop to Baseline or Main Profile?

Neither. Polycom to Lync video calls will use either H.264 SVC or RTV depending on the product and version. There is no AVC interop; the profile is irrelevant.

But H.264 SVC just runs multiple layers of H.264 AVC. Your own blog post above says that H.264 SVC UCConfig Mode 0 uses a single layer of H.264 AVC. So we're still talking about H.264 AVC at the end of the day.

No, that is not the case. While the media payload might be based on AVC, the implementation is completely unique, hence the need to support X-H264UC at some level; Lync does not advertise any standard H.264 codec support. The Network Abstraction Layer (NAL) data can be ignored by one side to basically 'drop' the additional SVC data so an AVC decoder can handle it, but this does not work natively between H.264 AVC-only endpoints and Lync clients. SVC is an annex of H.264 that is completely independent of the AVC profiles like baseline or high.
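The idea of ignoring the NAL data that carries the SVC additions can be sketched in a few lines. This is a payload-level illustration only, and as the reply stresses it does not by itself make an AVC-only endpoint interoperate with Lync. It assumes the stream has already been split into individual NAL units (no RTP depacketization or start-code parsing is shown) and relies on the H.264 convention that the low five bits of the first NAL byte hold the unit type, with types 14, 15 and 20 being the ones introduced for SVC. The function names are hypothetical.

```python
# NAL unit types added by the SVC annex of H.264:
# 14 = prefix NAL unit, 15 = subset sequence parameter set,
# 20 = coded slice extension (enhancement-layer data).
SVC_ONLY_NAL_TYPES = {14, 15, 20}


def nal_unit_type(nal):
    """Extract nal_unit_type from the low five bits of the NAL header byte."""
    return nal[0] & 0x1F


def strip_svc_nal_units(nal_units):
    """Keep only the NAL units that a plain AVC decoder understands."""
    return [n for n in nal_units if nal_unit_type(n) not in SVC_ONLY_NAL_TYPES]


# Toy input: header bytes only, with dummy one-byte payloads.
units = [
    bytes([0x67, 0x00]),  # type 7, sequence parameter set
    bytes([0x6F, 0x00]),  # type 15, subset SPS (SVC)
    bytes([0x68, 0x00]),  # type 8, picture parameter set
    bytes([0x6E, 0x00]),  # type 14, prefix NAL unit (SVC)
    bytes([0x65, 0x00]),  # type 5, IDR slice of the base layer
    bytes([0x74, 0x00]),  # type 20, coded slice extension (SVC)
]

print([nal_unit_type(n) for n in strip_svc_nal_units(units)])
```

What remains after filtering is the base layer, which is why the media payload can be AVC-decodable even though the signaling and control protocols around it are not.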

Take a look at this document http://bit.ly/1tWI6Nf for Microsoft Skype and Lync.

It says Constrained High Profile along with Constrained Baseline is required for logo qualification.

From that Skype & Lync document it reads:

Supporting the Constrained High profile will allow to:

– Achieve better rate-distortion performance by using CABAC, 8×8 transform, or quantizer scale matrices

– Achieve better quality by using QP for Cb/Cr channels

George, you are getting caught up in the details here. The point is that these are NOT compatible. The implementation of H.264 AVC High-Profile that is supported on many Polycom room systems is not directly compatible with H.264 SVC in Lync. It does not matter if the spec documents define capabilities across different codecs with similarly named profiles, the media payload is only one part of a much larger puzzle. The only third party devices directly compatible with Lync 2013 clients are utilizing X-H264UC in some capacity, or leveraging RTV for legacy compatibility. Lync clients cannot negotiate a video session with any systems advertising only H.264 AVC support.

So I have some Polycom Group 500s which should support X-H264UC. Then that should be directly compatible with the Lync 2013 implementation of H.264 AVC High Profile.

No, like I said that is not how it works. Those two codecs are not compatible.

I’m a big fan and follower

Thanks for your great information.

I’m a big fan

Thanks for your information.

Hi Jeff,

We purchased a Polycom Group 500 for two sites and integrated it with SFB.

Problem: When we initiate a peer-to-peer call we get 1080p resolution, but when we add any Skype for Business client to the peer-to-peer call or initiate a meeting (that is, when the SFB AVMCU is involved) we lose the 1080p resolution.

We want to achieve 1080p resolution with the Polycom devices in both peer-to-peer and conference calls, because Microsoft said that 1080p is now possible in a multiparty conference call.

Can you please guide me? Thanks in advance and would be much appreciated your prompt response.

Regard,

Asad Abbas

The Lync AVMCU will not send 1080p video in multiparty conference calls with more than a single participant when Gallery View is in use. Lower resolutions are transmitted by all clients.